Over the past few years, I’ve adamantly opposed AI graders. In my discipline, I feel that grading needs to be relational to encourage student buy-in.

(I’m writing from the perspective of a college English and Humanities professor, in fields where there frequently are no right answers. I’m also fortunate enough to teach small courses. I won’t generalize about other levels, disciplines, and contexts.)

But what about LLM-generated feedback?

I’ve been undecided.

On the one hand, I think that sending our own work into an LLM (if we’re comfortable doing so) is a quick-and-easy way to get feedback. It can get us outside our own heads, which is pivotal when we’re working against a deadline.

On the other hand, it takes some practice to keep the LLM from doing a complete overhaul. For many habitual users, setting parameters for the program’s feedback seems obvious. For my students, not so much. I also worry about the privacy implications of students sending their work into an LLM. Lastly, the sycophantic nature of LLMs is a problem: it can create a false sense of confidence. It’s hard not to be seduced by an LLM saying “great job” or “you’re all set.” Then we hit a wall when other feedback offers a very different perspective.

My position has been essentially to tell students “You can certainly run your work through an LLM and ask for feedback” and then stop there. It’s not a particularly nuanced position.

I’m constantly revisiting whether, and how, LLM-generated feedback can be worked into the classroom more intentionally.

That’s why, the other day, I watched a webinar offered by

and her research team. They have been conducting a research project at UC Davis since 2023, developing the PAIRR system for AI-assisted feedback in the writing classroom.

Here’s what that is.

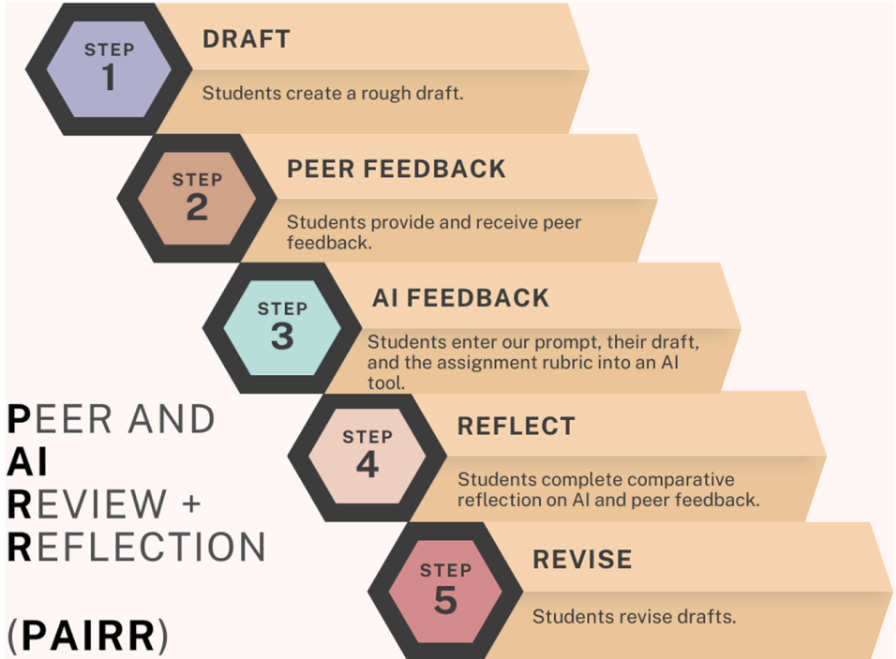

What is PAIRR?

PAIRR stands for:

P - Peer Feedback

AI - AI Feedback

R - Reflect

R - Revise

Essentially, a student completes a draft, gets human-created and LLM-generated feedback on it, and then takes a step back to reflect on the process and make changes.

Here’s an image from their materials:

The idea is that students get different sets of feedback and stitch them together into their own revision process. This forces them to go beyond simply accepting edits, as they might with a program like Grammarly. To revise meaningfully, they need to weigh the different sets of feedback against one another and decide how best to proceed.

For those who are interested, I’m sharing some of their other materials:

Click here for the recording.

Click here for the slides.

Click here for the materials packet (including prompts).

The last resource includes the prompts they used for their custom chatbots to elicit constructive, course-aligned feedback.

What I’m Doing

I really like the core idea: we can teach students to interrogate the connections and gaps between different sets of feedback. It’s a pivotal skill, not only for the classroom but for work and life.

So, I’m trying something.

In my 7-week online course (Comp II) at Berkeley College, I’m developing a teaching sequence.

In week 5, students will:

Design their own projects.

Design their own Self-Empowering Writing Processes (SEWPs) for completing those projects.

Implement their process in a rough draft.

Then, in week 6, they will:

Design rubrics (with the help of a custom chatbot).

Self-assess the rough drafts.

Peer assess the rough drafts.

Get AI assessments on the rough drafts.

Assess the different kinds of assessment.

I am developing the rubric-creating chatbot now. My goal is to encourage students to assess outside the context of traditional grades, focusing on self-improvement rather than numbers and letters.

This is a chance for me to think more deeply and specifically about how LLM-generated feedback can work. It’s also a chance to link peer review to the development of metacognitive skills.

I will report on how things go.

A Quick Announcement

On Thursday (July 17th), I will be co-hosting a free webinar on building a culture of transparency in the classroom.

I’ll cover the differences between first-person and third-person transparency, and what each means for how we design our learning experiences.

I primarily use first-person transparency, asking students to announce their specific uses of GenAI. A company (with which I have no affiliation) will focus on third-person transparency.

It’ll be an open conversation.

If you’re interested (or want the recording), here’s the link:

https://www.visibleai.io/workshops-and-resources/ai-as-a-co-pilot-redefining-student-collaboration-with-technology

Appreciate the nuanced take and linking to Anna Mills’ great work. It feels like experimenting with different options is just the world we’re in right now, so it’s nice to see what others are trying out. (I also appreciated your post on AI-free spaces and referenced it in my own substack recently.) I’ll plug your upcoming talk with some colleagues who’re also interested in this stuff.

Without question I'm intrigued by the idea of students navigating different types and vantage points of feedback; indeed, this is why I work so hard to elevate peer workshops so that the feedback students receive is not entirely my own.

However, I wonder how to maintain the integrity of the "authentic" phase in the early stages of writing, particularly when that work happens, at least in part, outside of class. In the same way I do not want students leaning on peer feedback until we get to the workshop, I want them to first set out on their own as writers before they lean on other sources.

Curious what those early stages look like for you in this process, and of course I always appreciate your openness in sharing your thinking and its evolving iterations. Very helpful for us secondhand learners, too!