Educators: It's Time to Lean In

Why Teachers Can No Longer Stay Silent About AI

Education is now front and center in the Great AI Discussion (I won’t say debate, though I could). The recent slew of updates from OpenAI and Google made sure of it.

Weren’t we already at the center?

Well, no, not really.

Up until this moment, most of the educators I know have:

Refused to take a stance

Pretended that they could ban AI and keep everything else

Focused exclusively on AI as an Academic Integrity issue

As Marc Watkins points out, “Far too many educators have checked out of the AI discourse.” And I understand why. Who has time to do one more thing (and one huge thing) on top of everything else?

But things have changed.

It’s no longer sustainable for educators to stay above the AI discussion or to focus primarily on banning AI—if such a thing were even possible.

Both OpenAI and Google released updates geared specifically to education. And this makes sense. Sam Altman recently talked about his excitement about getting into the AI Tutoring space. And in a recent demo, OpenAI brought in Sal Khan, the creator of Khan Academy:

(Sidenote: Please don’t get too excited about this demo. It’s impressive, but there are huge glaring problems here. It makes quite a few missteps even within a few short minutes.)

I don’t think I can emphasize this enough.

For better or worse, education is one of the primary use cases for this technology. And companies like OpenAI and Google know it. One of my favorite Substack writers,

, also recently wrote that Google’s LearnLM has massive potential. Of course, this is only if the products mostly live up to the demos.

That hasn’t happened yet, at any stage. The demos have always outdone the actual products.

But if the products can get anywhere close, even with further iterations, that would be huge for education.

So, what’s the problem?

There's a gap between what these AI tools offer and the needs of the modern classroom.

That's why the voices of teachers and students are sorely needed.

Based on what I’ve seen, these products focus on a single student (who is usually incredibly proficient) and a single use case. That’s why

argues that the Khan tutor will likely be good for certain students, under certain circumstances, for certain subjects. Let’s be honest. Actual, flesh-and-blood teachers don’t have those students, those circumstances, or those subjects 90% of the time.

There’s a gap between the student showcased in these demos and the conditions of the actual class, regardless of what level we teach. I’m a college professor. Even at my level, there is a huge need for teaching foundational reading and writing skills. (It’s not a good or bad thing. It’s just the way it is. That’s the job.)

The pedagogy isn’t inclusive.

What happens when:

A student refuses to look at the screen?

A student isn’t engaged in this process?

A student hasn’t done the homework at all?

A student doesn’t know what questions to ask?

A student really struggles to express themselves?

I’ll be honest. If I were a 15-year-old trying to learn Geometry, the Khan bot would not have worked for me.

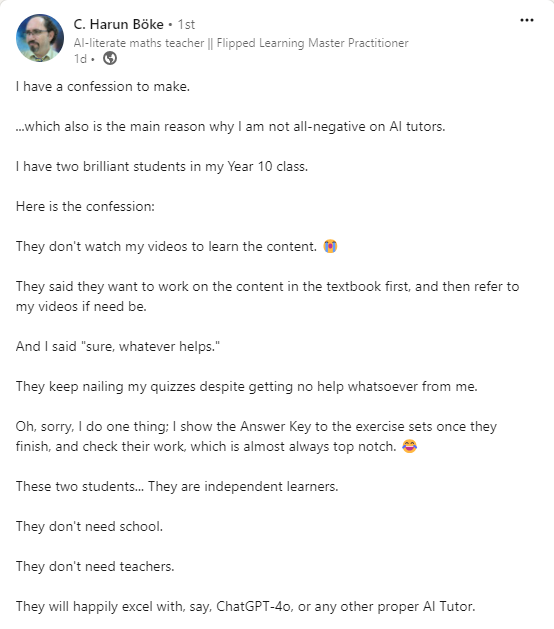

And that’s the thing. These AI tutors will work for some students some of the time, and leave out others. That’s why C. Harun Böke, an expert math teacher and expert on the flipped classroom, writes this:

Nailed it.

AI tutors have a very specific use case right now. Maybe that will change, and maybe it won’t.

Much the same can be said about these AI-driven education products in general. They say that they personalize education for students, but then deliver a one-size-fits-all approach.

But that’s not how these products are being sold. They are being sold as the most patient, most competent teachers in the world.

That idea violates the cardinal rule of good teaching: there are no silver bullets. The same goes for AI. It’ll be a useful tool, no doubt. But announcing that AI will save education or solve the education crisis misses the mark.

This is my hope

My sincere hope is that the recent updates from OpenAI and Google put the pressure on educators and schools. Now that we’re front and center in the AI discussion, whether we wanted to be or not, we have to ask some tough questions:

Do we want this technology? If there is any chance of teachers pushing against certain uses of AI, the time is now.

What are the consequences (intentional and unintentional) of anthropomorphization-at-scale? OpenAI’s main goal is to make ChatGPT sound like a person. That will have a huge effect on how our students see the world.

What does this mean for human connection? The loneliness epidemic among students (especially college students) is well-documented. Is AI going to supercharge that, along with everything else?

Do these AI tools comply with current regulations for schools? Many times, the answer is no. They often risk violating FERPA and other privacy regulations, as well as current accessibility standards.

It’s our chance to speak up, not only about what corporations like OpenAI and Google are doing with their AI products, but about the value proposition of formal education.

I’m keeping my fingers crossed that the recent updates will force this kind of conversation, which is long overdue.

Now’s the time to speak out

Teachers, parents, and students need to lean in more than ever. Educators have a wealth of knowledge, not from simply reading about pedagogy but from actually acting as practitioners. We need to hold onto that knowledge, often ignoring the glow of the technology to focus instead on our students and how humans learn.

We have a set of skills and experience that, right now, many of these programs seem to lack.

So, it’s up to us to actually step in and speak out for our students. Because if we don’t, these products will be worked into classrooms without our input.

And we also need to get students into the conversation:

Do they find these AI products helpful?

Are they worried about AI tutors for any reason?

If we’re not listening to them, we’re going to lose our way.

What can actually move the needle?

Here’s where I’m at.

I don’t think that these AI products can move the needle. They have severe limitations, in terms of their pedagogical soundness and their actual adaptability.

I don’t think this technology will significantly impact education until students are empowered to build. In this, I’m in line with Mike Yates:

Let’s lean into this:

Personalization isn’t individualization: you can personalize education with AI, while focusing on community rather than splintering it.

AI for the sake of AI is meaningless: our students don’t need shiny objects; they need pedagogically sound products.

So maybe, if we can harness this technology to actually empower students to create and build, we can actually move the needle.

But I don’t see many companies doing that right now.

They may need a push.

Great post, Jason. Thanks for your urgency! I agree that educators can't just shy away from AI tools and pretend they don't exist. By fall, students will have the world's most advanced language models at their fingertips. But I don't think we've hit an inflection point as yet. For one, hallucinations are a big concern and neither OpenAI nor Google explicitly addressed this issue in their announcements. Teachers are understandably leery of introducing AI that spouts inaccurate information to their students. Teacher discomfort with AI is already high and I don’t expect them to cheerily embrace AI in their classrooms simply because it offers new personalization features for their students. There is still a lot of work to be done in trying to get teachers to look beyond AI simply for automation.

I find it interesting that you seem to portray Khan Academy as outsiders, not educators, and therefore lacking pedagogy. Khan Academy opened in 2008 and as of 2022 had 120 million registered learners in 90 countries. Students have completed over 10 billion practice problems and watched over 1.5 billion instructional videos on the platform. They partner with organizations like NASA, The Museum of Modern Art, The California Academy of Sciences, and MIT. They are non-profit. Of course, Khanmigo isn't perfect, but I'm extremely impressed with what they are trying to do and the number of students they have helped, for free. It is easy to be critical, but I suggest you take a closer look at their mission and what they have accomplished so far.